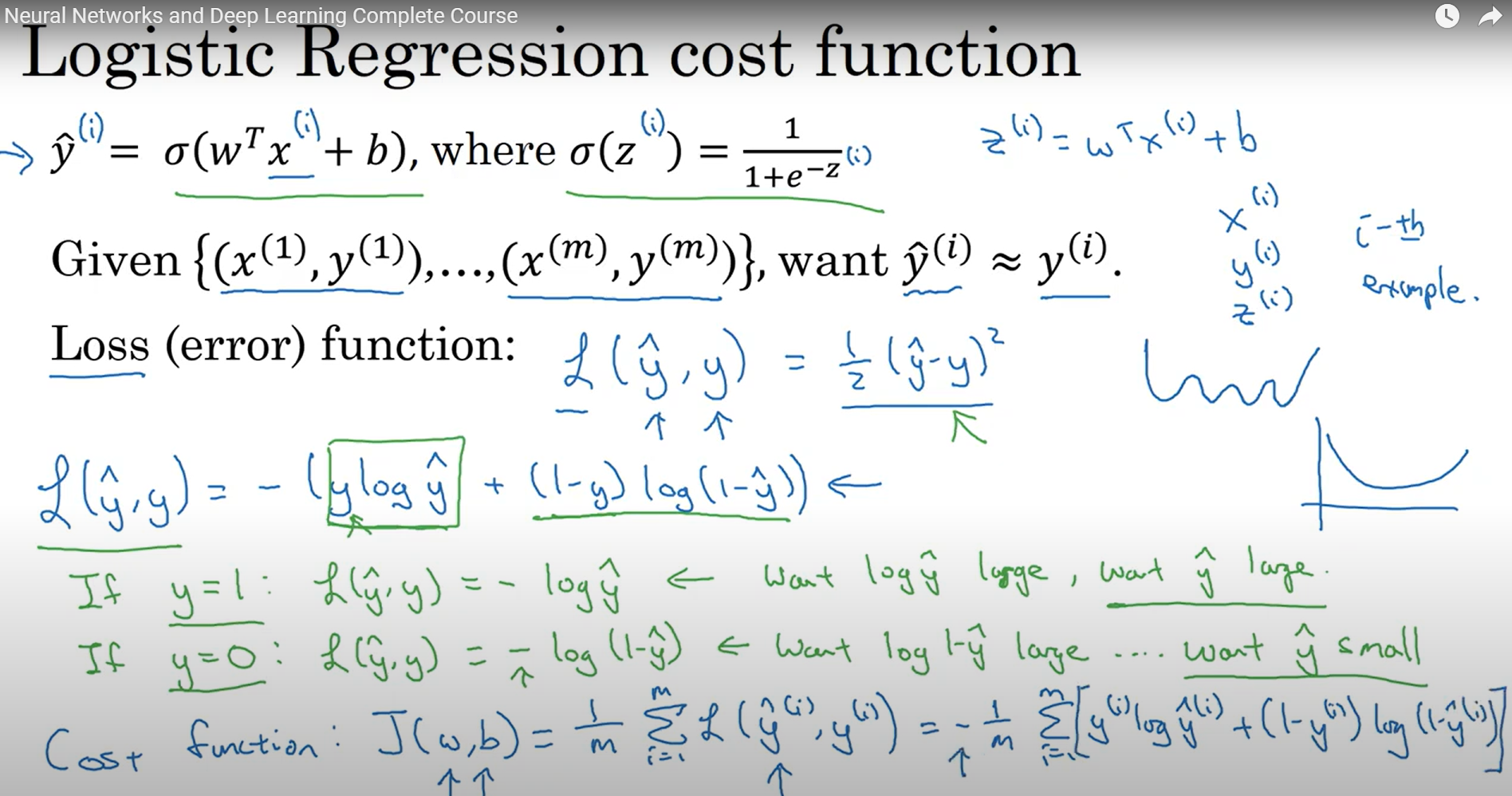

Logistic Regression loss function $L(y, \hat{y})$

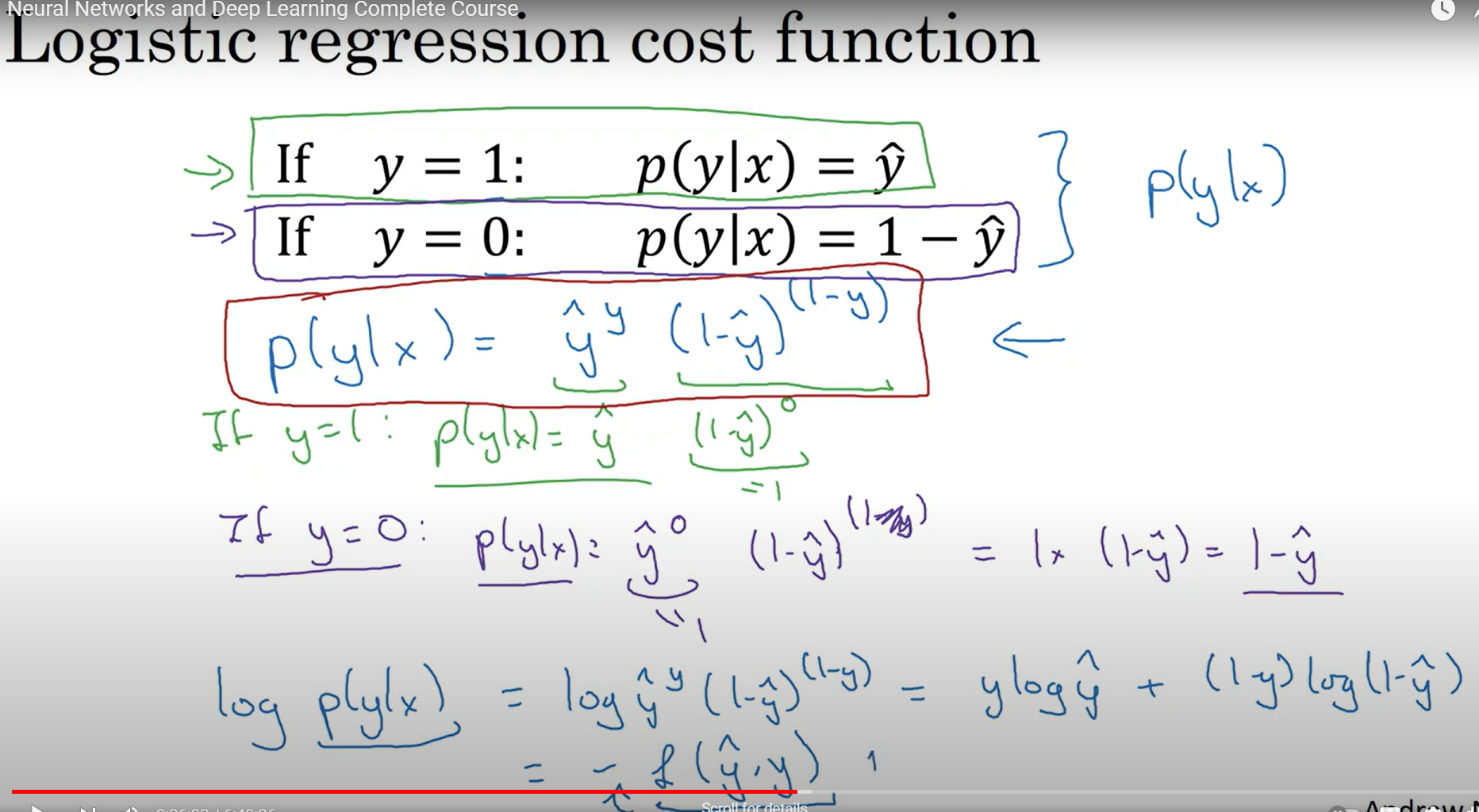

The logistic loss function, also known as log loss or binary cross-entropy loss, is commonly used in binary classification problems.

It measures the difference between the predicted probability distribution and the actual distribution of the target variable. The formula for the logistic loss function is given by:

$$L(y, \hat{y}) = -\left[ y \log(\hat{y}) + (1 - y) \log(1 - \hat{y}) \right]$$

where:

- $y$ is the true label of the example (0 or 1),

- $\hat{y}$ is the predicted probability of the example belonging to the positive class.
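The formula translates directly into a few lines of Python. This is a minimal sketch for a single example. The function name `logistic_loss` and the clipping constant `eps` are illustrative choices, not part of the original notes. The clipping keeps `log()` finite when $\hat{y}$ is exactly 0 or 1.

```python
import math

def logistic_loss(y, y_hat, eps=1e-15):
    """Logistic (binary cross-entropy) loss for one example.

    y:     true label, 0 or 1
    y_hat: predicted probability that the example is in the positive class
    eps:   illustrative clipping constant so log() never receives 0
    """
    # Clip the prediction into [eps, 1 - eps] to avoid log(0)
    y_hat = min(max(y_hat, eps), 1 - eps)
    return -(y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat))

# A confident, correct prediction gives a small loss...
print(logistic_loss(1, 0.9))  # about 0.105
# ...while a confident, wrong prediction gives a large loss
print(logistic_loss(0, 0.9))  # about 2.303
```

The asymmetry between the two calls is the point of the loss: predicting 0.9 for a positive example costs little, while predicting 0.9 for a negative example is penalized heavily.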

Logistic loss function explained

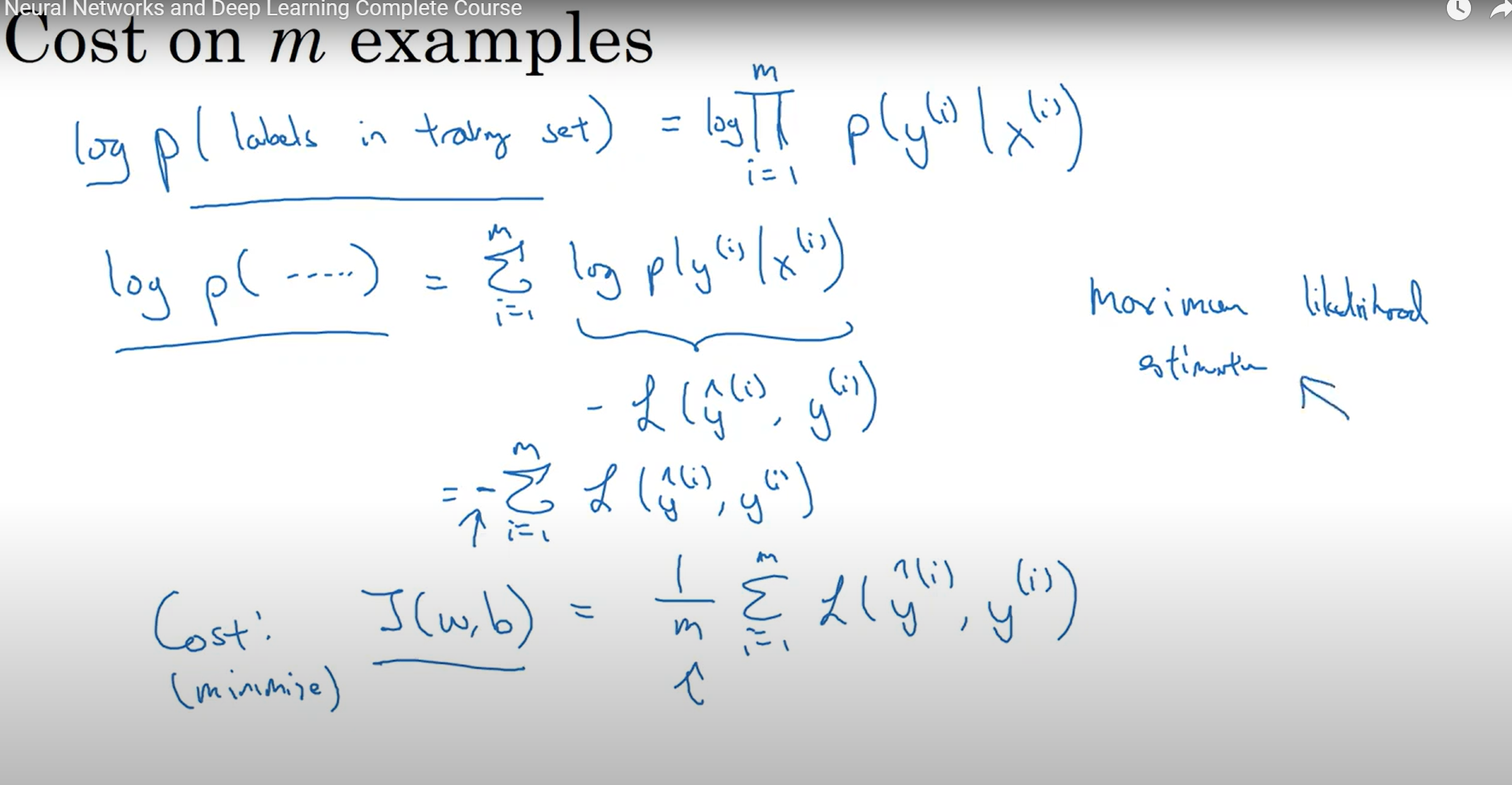
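Because $y$ is either 0 or 1, one of the two terms in the formula always vanishes, so the loss reduces to two cases:

$$
L(y, \hat{y}) =
\begin{cases}
-\log(\hat{y}) & \text{if } y = 1 \\
-\log(1 - \hat{y}) & \text{if } y = 0
\end{cases}
$$

For a positive example ($y = 1$), the loss approaches 0 as $\hat{y} \to 1$ and grows without bound as $\hat{y} \to 0$. For a negative example ($y = 0$), the behavior is mirrored: the loss approaches 0 as $\hat{y} \to 0$ and grows without bound as $\hat{y} \to 1$.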

Cost function $J(w, b)$