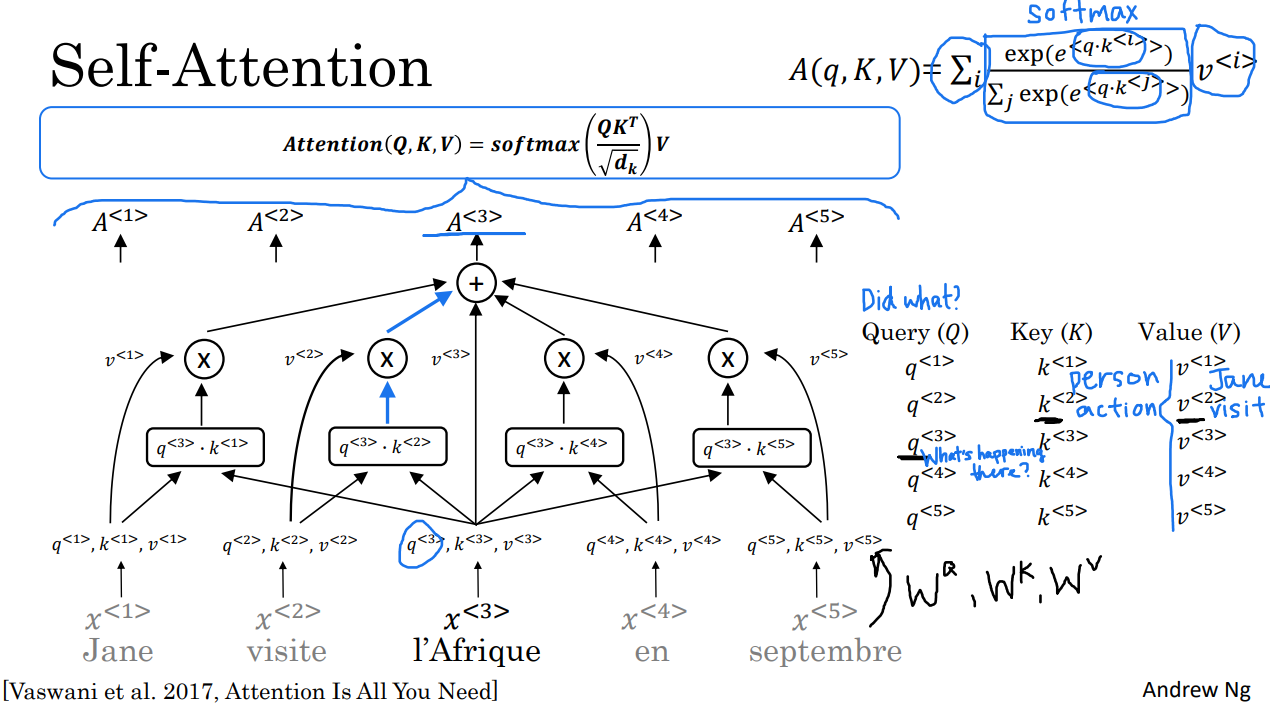

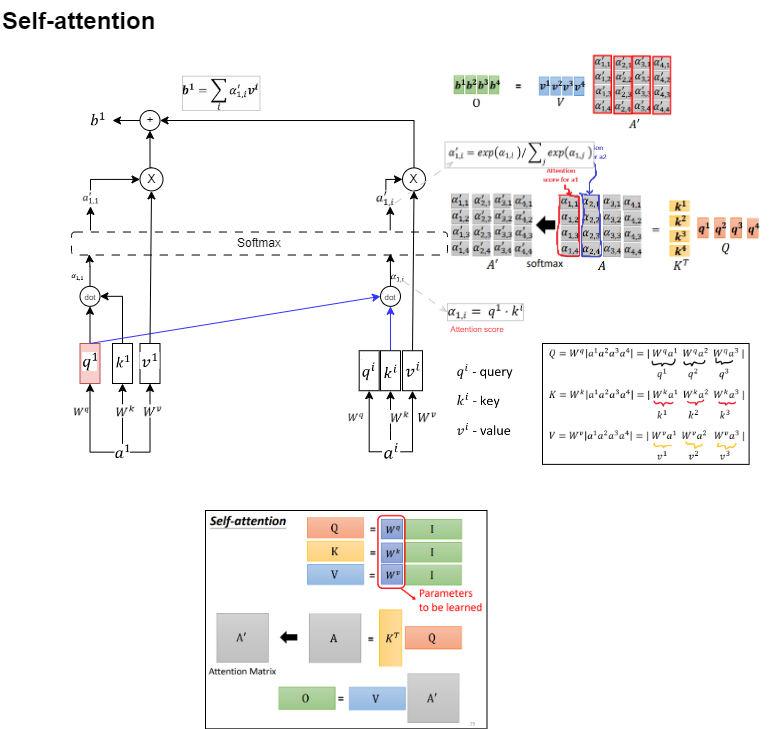

Self-attention Explained
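As a rough companion to the explanation, here is a minimal, dependency-free sketch of scaled dot-product self-attention, `softmax(Q K^T / sqrt(d_k)) V`. Each token is projected into a query, a key, and a value. Each query is scored against every key, the scaled scores are turned into weights with a softmax, and the output for a token is the weighted sum of the values. The helper names (`matmul`, `transpose`, `softmax`, `self_attention`, `random_matrix`) are hypothetical. The random matrices stand in for learned projection weights, and the toy sizes are arbitrary.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(s - peak) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Scaled dot-product self-attention over x (seq_len x d_model).

    Returns the output (seq_len x d_v) and the attention weights (seq_len x seq_len).
    """
    q = matmul(x, w_q)  # queries: what each token is looking for
    k = matmul(x, w_k)  # keys: what each token is matched against
    v = matmul(x, w_v)  # values: the content each token contributes
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # scores[i][j] = dot(q_i, k_j)
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    """Hypothetical helper: Gaussian matrix standing in for learned weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


# Toy example: 3 tokens, model width 4, query/key/value width 2 (arbitrary sizes).
rng = random.Random(0)
x = random_matrix(3, 4, rng)
w_q, w_k, w_v = (random_matrix(4, 2, rng) for _ in range(3))
out, weights = self_attention(x, w_q, w_k, w_v)
print(len(out), "x", len(out[0]))  # one d_v-sized vector per token
print([round(sum(row), 6) for row in weights])  # each row of weights sums to 1
```

The `1 / sqrt(d_k)` factor keeps the dot products from growing with the key width, which would otherwise push the softmax toward near one-hot weights.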

Multi-headed Attention Explained
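Multi-headed attention runs several attention heads in parallel, each with its own query/key/value projections. It concatenates their outputs per token and mixes them with an output projection `W_o`. The standard-library sketch below shows that structure under a few assumptions. The function names are hypothetical, random matrices stand in for learned weights, and `d_head = d_model // num_heads` is a common convention rather than a requirement.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(s - peak) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_head(x, w_q, w_k, w_v):
    """One scaled dot-product attention head; returns a (seq_len x d_head) matrix."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    scores = matmul(q, transpose(k))
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v)


def multi_head_attention(x, heads, w_o):
    """Run each head independently, concatenate per token, then project with w_o.

    heads: list of (w_q, w_k, w_v) tuples, one per head, each d_model x d_head.
    w_o:   output projection, (num_heads * d_head) x d_model.
    """
    head_outputs = [attention_head(x, w_q, w_k, w_v) for (w_q, w_k, w_v) in heads]
    # Concatenate every head's vector for token i into one longer vector.
    concat = [[val for out in head_outputs for val in out[i]] for i in range(len(x))]
    return matmul(concat, w_o)


def random_matrix(rows, cols, rng):
    """Hypothetical helper: Gaussian matrix standing in for learned weights."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(42)
seq_len, d_model, num_heads = 4, 8, 2
d_head = d_model // num_heads  # split the model width evenly across heads
x = random_matrix(seq_len, d_model, rng)
heads = [tuple(random_matrix(d_model, d_head, rng) for _ in range(3)) for _ in range(num_heads)]
w_o = random_matrix(num_heads * d_head, d_model, rng)
out = multi_head_attention(x, heads, w_o)
print(len(out), "x", len(out[0]))  # back to seq_len x d_model
```

Because each head has separate projections, different heads can learn to attend to different relationships in the same sequence. `W_o` then combines those views back into a single `d_model`-wide vector per token.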

Please see my write-up on both topics in Self-attention-and-multi-headed-attention.pdf.

References

- Hung-yi Lee, "Self-attention (1)" (lecture)

- Andrew Ng, Deep Learning Specialization (DLS), Course 5: Sequence Models

- Lewis Tunstall, Leandro von Werra, and Thomas Wolf, *Natural Language Processing with Transformers*